18 February 2021, Iris Proff

Look at the picture above – what do you see? When this task was given to participants in an experiment at the University of Tilburg, they came up with these descriptions:

“Uhm, a lot of sheep.”

“A large group of sheep and goats that, uhm, are led by a donkey and a man.”

“A landscape with mountains and some houses and a flock of sheep in the foreground.”

Each of these descriptions is unique and focuses on different aspects of the picture – yet they are all accurate. What might determine which description a person chooses? Research suggests that the way we look at an image influences how we perceive and describe it.

When participants in a study from 2007 described pictures showing two agents – such as a dog chasing a man or two people shaking hands – their descriptions were more likely to start with the agent they initially looked at. By manipulating where participants looked first, the experimenters could influence what they would say.

When we describe what we see, the seemingly disparate cognitive processes of language production and vision intertwine. But how exactly seeing and speaking align is not known. To address this question, the Dialogue Modelling Group at the ILLC, led by Raquel Fernández, builds observations from human behavior into algorithms that automatically generate descriptions for images. This leads not only to more human-like automatic descriptions, but also to a deeper understanding of how vision and language may interact in the human brain.

Automatic image captioning

Automatic image captioning is an interesting task for the AI research community, as it combines the two prime applications of neural networks: computer vision and natural language processing. It poses a challenge that goes well beyond the typical dog-or-cat image classification. It tests the computer’s ability to judge what an image essentially shows – what is important and what is not. An easy task for humans, but a very hard one for machines.

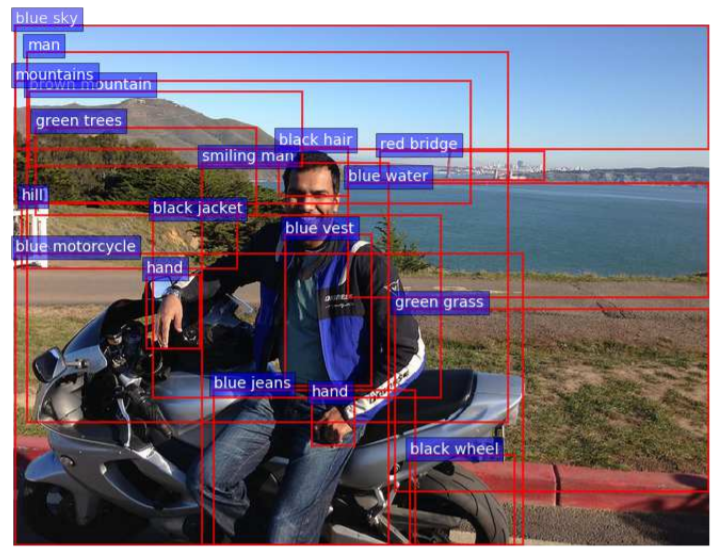

A state-of-the-art image captioning system such as the one proposed by Anderson and colleagues in 2018 works as follows. First, a neural network recognizes individual objects: a sheep, another sheep, a man, a mountain. Second, an attention component determines which of these objects are most relevant to the description. Inspired by visual processing in humans, the algorithm draws attention to areas with salient visual features such as high contrast or bright colors, and to areas containing objects of interest like faces or written letters.

Finally, a language model produces a sentence that describes these objects and how they relate to each other. This model aims to mimic how humans describe images and the visual world in general. However, while humans look at different parts of an image as they are describing it – sequentially, one object at a time – the computer model is fed all visual information at once.

Models that gaze at pictures

To overcome this limitation, Ece Takmaz, Sandro Pezzelle and Raquel Fernández at the ILLC and Lisa Beinborn at the VU Amsterdam expanded the state-of-the-art image captioning model. They made it look at pictures like humans do – in sequence. “We take inspiration from cognitive science to make our models more human-like”, says Ece.

When learning a picture-description pair, image captioning models receive the words a participant uttered one by one. Along with each word, the expanded model only sees the section of the image the participant fixated at that moment – like a human and unlike the original model, which sees the whole image at once.

The researchers trained and tested their model on the Dutch Image Description and Eye-tracking Corpus, a dataset collected at the University of Tilburg, that contains images, spoken descriptions and eye-tracking data.

Does this novel method come up with better descriptions? Notably, for algorithms that generate language it is not obvious what “better” means. There is not a single correct description for an image, just as there is not a single correct short story, text summary or email reply. To measure how well such models perform, researchers usually measure how similar the model output is to the output of a human doing the same task.

According to such a metric, the gaze-driven model performs better than the model by Anderson and colleagues. That is, it generates more human-like image descriptions, regarding both content and structure.

Human-like image descriptions

What is it that makes the model more human-like? The researchers identify three key features. First, the model repeats less. “Repetition is something that is very difficult to get rid of in language generation models in general” says Raquel Fernández. “Models come up with descriptions like ‘a man eating a pizza pizza pizza.’” The gaze-driven model tends to reduce such unnecessary repetitions.

Second, the gaze-driven model has a larger vocabulary and uses more specific words. When people focus on particular details in a picture, the model is likely to incorporate those in the description. “Still, our model lacks behind the rich vocabulary used by human speakers” says Sandro Pezzelle.

Finally, the gaze-driven model captures speech disfluencies: “uhms”, hesitations and corrections that speakers make. The picture on the right shows a particularly hard to describe scene, which is reflected not only in people’s descriptions, but also in their gaze patterns. The gaze-driven model captures this difficulty and comes up with descriptions such as “a photo of a street, uhm, with some birds”, but also “uhm, uhm, uhm, uhm and some birds”. Interestingly, people seem to perceive artificial dialogue systems using filler words and corrections as more pleasant to interact with than systems that stop speaking whenever they encounter an uncertainty.

Descriptions that are more human-like are not necessarily more accurate or better suited for practical applications of image captioning. “We are not trying to produce the most efficient image caption”, says Raquel Fernández. “We rather try to reproduce the process by which humans describe images.”

Her team’s work confirms that vision and speech production align and that this alignment is not simple – often people look at things which they don’t (immediately) mention. The research also shows that incorporating multiple modalities and cognitive signals into AI tools is a promising pathway to make them better.

The important choice of language

“The long-term goal of this type of research is not just to describe photographs, but to connect the extra-linguistic world to language”, says Raquel Fernández. It could be the starting point in the development of advanced tools for visually impaired people, or intelligent dialogue systems that assist you while driving a car.

When it comes to such practical applications, it is crucial that these are available in many languages. But the vast majority of research in the field is conducted on English data. The Dutch language dataset chosen by the researchers at the ILLC thus posed a challenge, as models pre-trained on Dutch language are rare. However, this seems to be slowly changing as natural language processing research expands to more languages, the scientists point out.

Also from a scientific perspective, research into different languages is valuable. “In Turkish – my mother tongue – the order of words is very flexible. We can shuffle things around”, says Ece Takmaz. “Maybe the alignment between vision and language proceeds in a different way here.” In the near future, the researchers plan to investigate such cross-linguistic differences.